The Influentists

Last week, the developer community was busy discussing a single tweet:

I'm not joking and this isn't funny. We have been trying to build distributed agent orchestrators at Google since last year. There are various options, not everyone is aligned... I gave Claude Code a description of the problem, it generated what we built last year in an hour.

— Jaana Dogan ヤナ ドガン (@rakyll) January 2, 2026

The author is Jaana Dogan (known as Rakyll), a highly respected figure in the Google ecosystem, in the open-source world, and in my heart (thank you Rakyll for your great Go blog posts).

At first glance, the tweet suggests an enormous shift in the software industry: the ability to build in just one hour, from nothing more than a description of the problem, what previously required weeks or months of work from a team of software engineers. The tweet was overly dramatic in my opinion, but still impressive!

The post triggered an immediate wave of “doom-posting,” with many fearing for the future of software engineering (as has happened every week for a year now). However, as the conversation gathered a large number of replies and quotes on social networks, Rakyll released a follow-up thread to provide context:

To cut through the noise on this topic, it’s helpful to provide more more context:

- We have built several versions of this system last year.
- There are tradeoffs and there hasn't been a clear winner.
- When prompted with the best ideas that survived, coding agents are able to… https://t.co/k5FvAah7yc

— Jaana Dogan ヤナ ドガン (@rakyll) January 4, 2026

This response thread revealed a story far less miraculous than the original tweet suggested. Let’s analyze it.

Crucially, the foundational “thinking” had already been performed by Rakyll herself, who guided the AI using architectural concepts (honed over several weeks or months of prior effort) rather than the AI thinking and inventing the “product” from scratch.

Furthermore, the resulting project was strictly a proof-of-concept that falls far short of a production-ready system capable of managing real-world complexity.

And finally, this success hinged on Rakyll’s implicit domain knowledge and deep expertise. This last point is often (strategically?) omitted from these “magic” viral demonstrations in order to make the tool appear far more autonomous than it truly is.

Hmm. Now, this is far less exciting…

Under influence

This pattern of “hype first and context later” is actually part of a growing trend.

I call the individuals participating in this trend “The Influentists”. These people are members of a scientific or technical community who leverage their large audiences to propagate claims that are, at best, unproven and, at worst, intentionally misleading.

But how can we spot them?

I personally identify these “Influentists” by four personality traits that characterize their public discourse.

The first is a reliance on "trust-me-bro" culture, where anecdotal experiences are framed as universal, objective truths to generate hype. This sentiment is perfectly captured by the “I’m not joking and this isn’t funny” tone of Rakyll’s original tweet, and by the dramatic “I’ve never felt this much behind as a programmer” from Andrej Karpathy’s tweet.

This is supported by an absence of reproducible proof, as these individuals rarely share the code, data, or methodology behind their viral “wins”, an omission made easier than ever in the current LLM era.

And finally, they utilize strategic ambiguity, carefully wording their claims with enough vagueness to pivot toward a “clarification” if the technical community challenges their accuracy.

I've never felt this much behind as a programmer. The profession is being dramatically refactored as the bits contributed by the programmer are increasingly sparse and between. I have a sense that I could be 10X more powerful if I just properly string together what has become…

— Andrej Karpathy (@karpathy) December 26, 2025

A growing pattern

Rakyll is far from alone. We see this “hype-first” approach across major AI firms like Anthropic, OpenAI, or Microsoft.

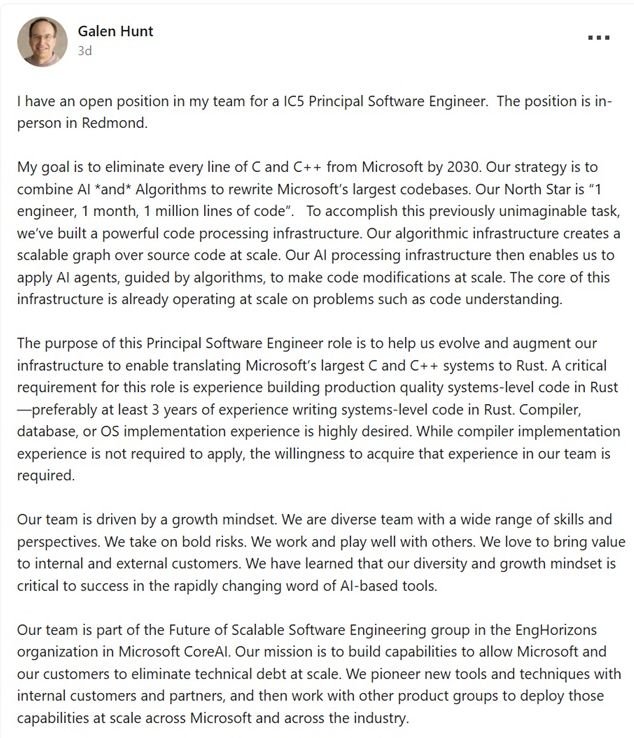

Consider Galen Hunt, a Distinguished Engineer at Microsoft. He recently made waves by announcing a goal of rewriting Microsoft’s massive C/C++ codebases in Rust by 2030 using AI.

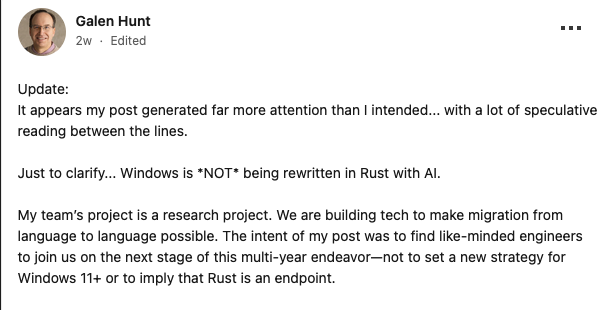

When the industry pointed out the near-impossible complexity of this task and asked what it meant for popular, critical products such as Microsoft Windows, he was forced to clarify that it was only a “research project”.

Similarly, engineers from Anthropic and OpenAI often post teasers about “AGI being achieved internally”, only to release, months later, models that disappoint the crowd.

Wait, can we consider seriously the hypothesis that

1) the recent hyped tweets from OA's staff
2) "AGI has been achieved internally"
3) sama's comments on the qualification of slow or fast takeoff hinging on the date you count from
4) sama's comments on 10000x researchers

are… https://t.co/f57g7dXMhM pic.twitter.com/Gap3V7VqkK

— Siméon (@Simeon_Cps) September 24, 2023

Liam, I have been a professional programmer for 36 years. I spent 11 years at Google, where I ended up as a Staff Software Engineer, and now work at Anthropic. I've worked with some incredible people - you might have heard of Jaegeuk Kim or Ted Ts'o - and some ridiculously… https://t.co/Ku8agTrps3

— Paul Crowley (@ciphergoth) December 31, 2025

Similarly, many other companies lie about what they are solving or intend to solve.

The cost of unchecked influence

When leaders at major labs propagate these hype-based results, it can create a “technical debt of expectations” for the rest of us. Junior developers see these viral threads and feel they are failing because they can’t reproduce a year of work in an hour, not realizing the “magic” was actually a highly curated prototype guided by a decade of hidden expertise.

We must stop granting automatic authority to those who rely on hype, or vibes, rather than evidence.

If a tool or methodology were truly as revolutionary as claimed, then it wouldn’t need a viral thread to prove its worth, because the results would speak for themselves.

The tech community must shift its admiration back toward reproducible results and away from this “trust-me-bro” culture.