The AI boredom

Summary by the author…

…to avoid wasting energy (and money) on summarization algorithms.

I don’t share the tech world’s enthusiasm for the current generation of Artificial Intelligence (AI) products because:

- most of them bring little value to people outside the ‘tech enthusiast’ world,

- unreliability and instability are still unsolved issues,

- they primarily profit big, and often unreliable, companies,

- they consume excessive energy,

- ethical and legal challenges are not a concern for big companies.

Now, the content

Not a day goes by without me hearing or seeing the term “Artificial Intelligence” (AI) somewhere. AI is everywhere. It is the “new trend” (or “tech bubble”) of the tech industry, and it is here to stay for a while.

However, I’m sorry, but I don’t share the tech world’s enthusiasm for the latest AI products. Actually, I feel pretty bored.

For more than a decade now, AI has made great progress, from groundbreaking scientific advances (e.g., AlphaGo, AlphaFold, YOLO, real-time video segmentation) to… ugly content generation for multi-billion-dollar companies.

Unfortunately, AI has now become synonymous with “LLMs” (large language models) in the tech world. As a former AI engineer trained in what we now call statistical machine learning and explainable methods, I find this trend irritating to no end.

AI was supposed to solve some of our society’s greatest challenges:

- helping people with boring tasks, freeing them to focus on meaningful work,

- detecting diseases and developing drugs for new or rare conditions,

- addressing societal crises with unbiased algorithms for critical decisions,

- identifying and blocking harmful content online in real-time,

- detecting and neutralizing cyberattacks in real-time,

- or assisting people in distress.

The common theme here is “helping people”. Yet, aside from basic task assistance for a small subset of users and some potential in medicine, AI has largely failed at every other goal.

There is no goal

Instead, we get emoji-generating models on our phones. They are trained in massive, power-hungry datacenters and drain our devices’ batteries and memory.

Tech companies seem directionless with AI. Even when they don’t need it, they embrace it to attract investment, much as they did with crypto and NFTs before the AI hype.

The best example of this is one of Apple’s most recent, and most insignificant, ads:

Interestingly, this does not seem to raise eyebrows outside the tech world. Google Search interest is as low now as it was ten years ago.

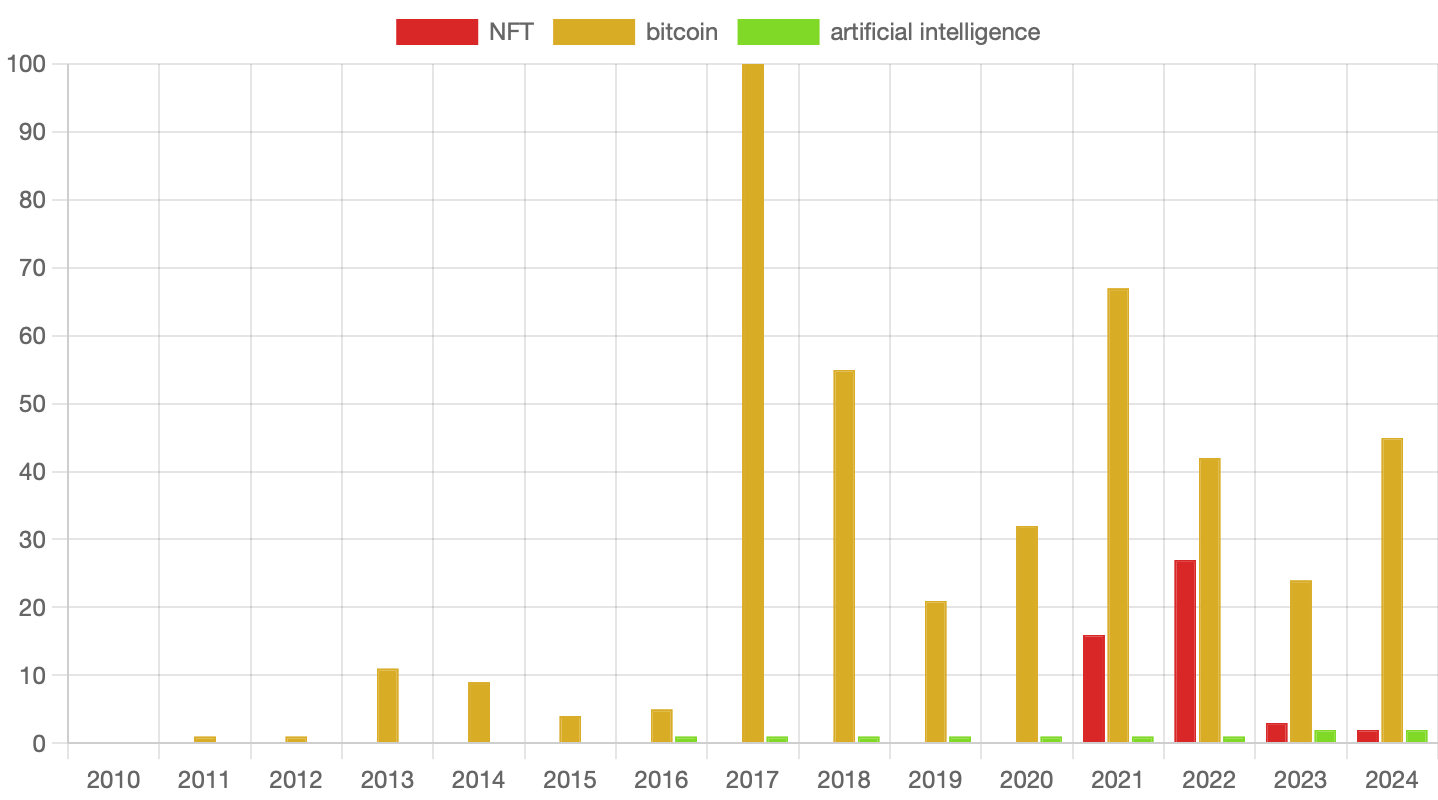

For example, here is the Google Search trend for the term “Artificial Intelligence” compared with “NFT” and “Bitcoin” from 2010 to 2024.

For reproduction, please follow this link.

Can you see the bubble somewhere?

The unreliability problems

Why aren’t new AI models widely used for critical problems? I believe it’s because of their numerous reliability issues.

Unreliability clearly plagues recent AI models (and, more generally, software).

They can’t be thoroughly tested on completing full tasks at an expert level, and they are trained on biased and often unreliable data.

Moreover, as long as AI is treated as a black box, and as long as major companies like Microsoft, Meta, Google, and OpenAI dominate its development, I believe unreliability will persist.

For example, if new AI models are as reliable, powerful, and unbiased as claimed, why aren’t they being used to detect and block hateful content in real time?

Instead, Meta’s solution to this very serious problem is to rely on… humans.

These unreliability issues raise new and complex questions about security and testing, and those questions cause long delays between concept and production (for example, you still can’t buy a self-driving car in early 2025).

Embrace innovation (not responsibilities)

The question of responsibility for AI actions is critical, yet many companies seem indifferent to it. There have been countless examples of this on the internet in recent years, but two of them caught my attention recently:

- the airline Air Canada and its responsibility for its (funny) chatbot’s actions,

- and, on a different scale, Character.AI’s responsibility in the death of a teenager.

Responsibility entails moral accountability, but tech companies (outside the EU, perhaps) seem to care about morality only when courts or bad press force them to. Meta’s solution for blocking harmful content, mentioned earlier, can actually be seen as a way to avoid legal or moral responsibility if AI decisions go wrong (‘not my fault!’).

The energy crisis

The energy crisis is another significant problem. However, I guess thinking about global impact is too much to ask of big companies’ CEOs and shareholders. They are too busy figuring out how to replace experts with unreliable algorithms.

The wrong leaders

Speaking of CEOs, let’s discuss “AI figures”…

I have no doubt these people are smart, but I question why they’re trusted to shape the future, and why their visions are embraced.

For example, Sam Altman has expressed the idea of replacing ordinary people in their jobs with unreliable algorithms.

To me, you can only consider “replacing A with B” if:

- B performs better than A at one or more tasks, regardless of cost, or

- B is equivalent to A in quality and delivery, but cheaper.

But we are still far from that level. OpenAI seems to struggle to achieve significantly better performance (and reliability) than its previous models, despite increasing model sizes and costs.

So why should we keep listening to Sam Altman when his predictions, especially about AGI, have turned out wrong?

And if people lose their jobs to AI, where will they find the $30/month for AI subscriptions?

But there is still hope

So, all of this is what we call “The Future”? Is it really that fucked?

I hoped AI would take over all my unpleasant everyday tasks (doing the dishes, cooking healthy meals, etc.) and allow me to focus only on more “fulfilling” activities. But we are still far from that…

For example, my robot vacuum cleaner is as dumb as it was five years ago.

Despite all of this, there is one exception I’ve seen: DeepMind. DeepMind has truly raised the bar with scientific breakthroughs for over a decade, and it genuinely strives to help humanity. I encourage you to take a look at their portfolio of scientific achievements.

These are all the reasons I cannot share the enthusiasm surrounding the latest AI-powered emoji generators, even if my eyes still light up at the ten-year-old AlphaGo.

Special thanks to G. Hecht for their review.